TL;DR

Most companies leave a gap between development and security. Developers move fast, and infosec steps in too late, when issues are already hard and expensive to fix.

A new role—DevSec—fills that middle space. DevSec catches insecure patterns early, filters noisy alerts, guides developers with simple and practical advice, and prevents small mistakes from becoming real vulnerabilities. It’s not a replacement for dev or infosec, but a missing function that keeps products safer, reduces rework, and helps teams move faster with fewer surprises.

2025-12 – By Chris Sprucefield.

Most companies still separate development and security into two distant groups. Developers build features, ship code, and keep things going. Infosec teams respond to alerts, run scans and write long lists of issues that often arrive too late in the development cycle to fix without disruption.

This split leaves a gap in the middle.

Meanwhile, nobody is watching the small decisions and habits that create security risks long before anyone notices them. By the time a formal security review happens, the code has settled and dependencies have grown. The system has become harder to change, and at that point, problems are expensive, frustrating, and often pushed aside because deadlines are tight.

We need a role that fills this gap.

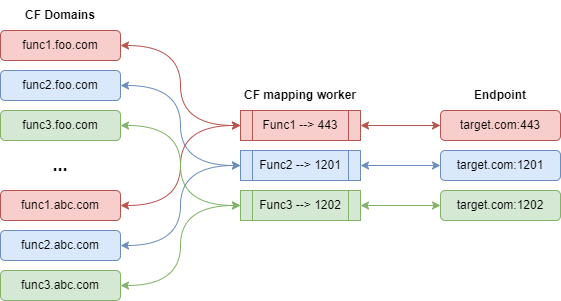

For now, I’ll call it DevSec—not an existing title, but a new class of function designed to sit between development and traditional infosec, focused on preventing problems before they turn into incidents or audits.

What DevSec Is (and Isn’t)

- DevSec is not a developer who happens to care about security.

- DevSec is not an infosec analyst who steps in after the fact.

- DevSec is not a pipeline engineer building scanners or automations.

Instead, DevSec is a practical, hands-on generalist who understands enough about code and enough about security to evaluate risks as they appear and are reported by supporting tools or reviews, not months later. They don’t need deep, specialized expertise in every system, but they do need the ability to look at a piece of code or an alert and decide:

- Is this threat real, or is it noise?

- Could this pattern cause trouble later if not fixed?

- Does this issue affect our actual product or environment?

- What is the simplest fix?

- … and how do we prevent it from recurring?

The point is not to replace security teams or developers. The point is to augment and support developers at an early stage, and to prevent avoidable work and avoidable failures by catching issues while they are still easy to fix.
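The triage questions above can be sketched as a small decision function. This is only an illustration of the reasoning, not a real tool: the `Alert` fields and the returned labels are hypothetical names invented for this example, and a real DevSec judgment would weigh far more context.

```python
from dataclasses import dataclass


@dataclass
class Alert:
    """A hypothetical scanner or review finding (all fields are assumptions)."""
    rule: str
    affects_product: bool  # Does this issue affect our actual product or environment?
    exploitable: bool      # Is this threat real, or is it noise?
    may_recur: bool        # Could this pattern cause trouble later if not fixed?


def triage(alert: Alert) -> str:
    """Return a coarse, hypothetical triage label for one alert."""
    if not alert.affects_product:
        return "noise"
    if alert.exploitable:
        return "fix now"
    if alert.may_recur:
        return "fix the pattern"
    return "watch"


alerts = [
    Alert("sql-string-concat", affects_product=True, exploitable=True, may_recur=True),
    Alert("cve-in-unused-dev-tool", affects_product=False, exploitable=False, may_recur=False),
    Alert("broad-file-permissions", affects_product=True, exploitable=False, may_recur=True),
]
labels = [triage(a) for a in alerts]
```

The ordering is the design choice here: relevance to the actual product is checked first, so alerts that cannot affect it never reach the developers.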

Why This Role Matters

1. Developers aren’t meant to be full-time security analysts

Most development teams already deal with tight timelines. Handing them a long list of scanner warnings only slows them down. They need someone who filters out the noise and highlights the few things that truly require attention.

2. Traditional security looks at problems too late

Security teams often depend on completed features, logs, or external scans. They step in only after code is written, patterns are set, and risky habits have already spread through the codebase. Furthermore, traditional infosec teams often do not have the budget for this, and they are typically ill-equipped to review code or be very hands-on, as their primary focus is on process, procedure, and higher-level, systematic security.

3. The space in between is where most vulnerabilities are born

Unsafe defaults, repeated shortcuts, overly permissive functions, code pasted straight from AI tools, SQL injection, forgotten test logic, and many other common bad or lazy practices: these are the seeds of future incidents. They form quietly in day-to-day development, especially when the pressure to deliver is high or the development team is young.

A DevSec function sees these before they harden into real vulnerabilities.
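As one concrete instance of such a pattern, here is a minimal sketch of SQL injection using Python's standard `sqlite3` module. The table, the sample users, and the function names are assumptions made up for this example. It contrasts a query built by string concatenation with a parameterized one.

```python
import sqlite3


def make_db() -> sqlite3.Connection:
    """Create an in-memory database with two hypothetical users."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (name TEXT, role TEXT)")
    conn.executemany(
        "INSERT INTO users VALUES (?, ?)",
        [("alice", "admin"), ("bob", "user")],
    )
    return conn


def find_user_unsafe(conn: sqlite3.Connection, name: str) -> list:
    # Insecure pattern: user input is concatenated into the SQL text,
    # so the input can change the structure of the query.
    query = "SELECT name, role FROM users WHERE name = '" + name + "'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str) -> list:
    # Parameterized query: the value is bound separately from the SQL text
    # and is only ever treated as data.
    return conn.execute(
        "SELECT name, role FROM users WHERE name = ?", (name,)
    ).fetchall()


conn = make_db()
payload = "nobody' OR '1'='1"
unsafe_rows = find_user_unsafe(conn, payload)  # matches every row in the table
safe_rows = find_user_safe(conn, payload)      # matches nothing
```

The fix is a one-line change when caught in review, which is the point: the same pattern is far more costly to hunt down once it has been copied across a codebase.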

What DevSec Actually Does

Here’s what this role focuses on:

- Reviewing code for insecure patterns without requiring full developer depth.

- Triaging alerts from automated tools to identify what matters and what doesn’t.

- Spotting bad practices early and nudging the teams to correct them.

- Explaining risks in simple, practical and actionable terms.

- Offering targeted suggestions for fixing problems now and avoiding them later.

- Keeping the security posture aligned with how the product actually works.

This is early-stage, practical prevention—not bureaucracy, not policy writing, and not firefighting.

The Benefit to the Whole Team

With DevSec in place:

- Developers get fewer false alarms and clearer guidance.

- Security teams receive fewer late-stage surprises.

- Risk is primarily handled at the point of creation instead of after release.

- Bad habits are corrected early, reducing long-term maintenance pain.

- The product becomes naturally more secure without slowing down delivery.

- When there are external threats, developers get help deciding where to focus fixes.

This helps companies avoid the familiar cycle of security issues suddenly piling up right before an audit, or surfacing only after customers report something unexpected.

A nice side effect is that it is highly likely to save the company money through fewer costly late-stage fixes and revisits (time that can instead be spent on developing products), all while delivering a safer product, which in turn improves goodwill and market reputation among its customers.

Why DevSec Is Needed Now

Many companies are now building faster, integrating third-party tools constantly, and relying heavily on automated systems. The pace of change means small missteps compound quickly. Traditional security functions can’t keep up with that pace if they’re only brought in late. Developers can’t shoulder responsibility for everything either—they’re not equipped, and it’s not realistic.

Classic infosec teams focus primarily on the bigger picture, processes, and procedures, and while they are very good at what they do, they are typically not very hands-on.

The midpoint has been empty for too long.

A dedicated DevSec role fills that gap and brings steady, ongoing security awareness into the daily rhythm of development, without overwhelming anyone.

This isn’t about introducing another layer of process. It’s about putting someone in the spot where issues actually appear—right where code is written, habits form, and risks begin.

DevSec is the missing piece that makes that possible.

(C) (BY) EmberLabs® & Chris Sprucefield
