Thoughts on AI, Security and practical day to day use.


As mentioned before, I am involved in R&D in a related branch, “AI hardware”, and this brings me to AI and its more general use.

These days there is almost a competition to use AI wherever possible, regardless of whether it is needed, practically usable, or actually serves a purpose. That is quite understandable, because it is hard to sell a product and stay competitive without having the word AI crammed in somewhere in the sales pitch.

So let’s have a little bit of a pragmatic look at it.

So what is an AI?

Most AIs today are LLMs (Large Language Models), based on software that emulates neurons and trained on large masses of data (largely from the internet).

There are basically three models for how you train AIs, but most importantly, no AIs are trained on the fly, because as things stand now that would effectively destroy the neural network setup while it is in use.

We are simply not there with dynamic LLMs, just yet.

Models are only retrained periodically, on the existing data plus any additional data gathered in the meantime, as training is very taxing on computational and financial resources.

This in turn means that the risk of live leakage is very low, but the risk of future leakage is still there, because questions and other supplied data may be incorporated into later training material.

- The basic training model is where the AI gets a set of data and trains itself on it without supervision.

Obviously this is not a very good way to train a model, and the outcome is rarely what you wanted it to be, but it all starts with this one.

- The second model is supervised training, where you give it hints as to what is correct or not, and sometimes rules that must be met, encouraging the model to make the right decisions or tweak its output to match. This is the model typically used in large language model training, where more expressive solutions and reasoning are required based on the input, as it offers a greater deal of freedom than the third model. It also allows for evaluation of incorporated third-party data as part of the input, to create a reasonably balanced, but not always correct, output.

You can quite easily force the model to provide an incorrect answer by making it accept incorrect source data and telling it that it can’t deviate from this.

- The third model is the most restrictive, and involves “punishment”: essentially killing the models that give the wrong answer against a set of fixed rules during training, and letting only the ones that provide the most correct answers live on for further refinement. This model is typically used in image recognition and similar tasks, and is the one typically applied in machine learning where you have a fixed output to match against, so that the AI reports a result as either correct or not correct, or where you need to identify an object.

As always, there are of course variations to the above, but it gives you a rough insight as to what it is and how it works.
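To make the third model a bit more concrete, here is a minimal toy sketch of the “only the best survive” idea. Everything in it is an assumption made up for illustration (the task, the single-weight “model”, and the names `fitness` and `evolve`), and it is nowhere near how a production system is trained.

```python
import random

# Toy task (an assumption for illustration): the label is True when x > 2.0.
EXAMPLES = [(0.5, False), (1.0, False), (1.5, False),
            (2.5, True), (3.0, True), (4.0, True)]

def fitness(weight, examples):
    """Number of examples a candidate 'model' (a single weight) gets right."""
    return sum((x * weight > 1.0) == label for x, label in examples)

def evolve(examples, population_size=50, generations=50, seed=0):
    """Keep the best quarter of the candidates each round, discard the rest."""
    rng = random.Random(seed)
    population = [rng.uniform(0.0, 2.0) for _ in range(population_size)]
    for _ in range(generations):
        # Rank the candidates against the fixed rules, best first.
        population.sort(key=lambda w: fitness(w, examples), reverse=True)
        # "Punishment": only the top quarter lives on.
        survivors = population[: population_size // 4]
        # Refill the population with slightly mutated copies of the survivors.
        children = [rng.choice(survivors) + rng.gauss(0.0, 0.1)
                    for _ in range(population_size - len(survivors))]
        population = survivors + children
    return max(population, key=lambda w: fitness(w, examples))

best = evolve(EXAMPLES)
print(fitness(best, EXAMPLES), "of", len(EXAMPLES), "examples correct")
```

The survivors are carried over unchanged, so the best score can never get worse from one generation to the next.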

A little bit of history from a developer’s perspective.

In the past, when there were only books and manuals, developers had to rely on these, and often know them by heart, to actually get anything done. The amount of information was quite limited, so this was fairly easy. Essentially everything was written from scratch.

As we know, history happened, and the Internet came to be, and with it, things like Google. Open source solutions exploded, and then came the help sites to go with them, covering anything development.

Sites like Stack Exchange and many others appeared, and code samples were shared between users. Because of the perceived security risks, many developers were banned by their companies from using the Internet to search for solutions, even for common problems, as you could get “hacked” by a copy/paste, or you could leak information about your IP or other precious items.

This happened even when it was generic information, such as an error code and what caused it in a specific product. Eventually, the “internet” was generally accepted and became part of everyday business life.

The primary risk was, and is, as always, the developer who uncritically does a full copy/paste. If they have first provided specific enough information, a possible hacker can write a malicious response that works in that specific environment and solution, and the user then implements it in production code without any consideration or review, and with no peer review on commits.

The exact same can be said for any AI, whether it is in-app, typically a developer IDE, or external, like ChatGPT’s website. Many infosec teams seem to ignore the fact that you can look up search terms on Google to see what is being searched, which provides the exact same purported leakage mechanism in near, if not actual, real time, whereas this typically does not apply to AIs. Never mind questions on a website like Stack Exchange, which will be there forever in full text, whereas the AI question is ephemeral and will not be reused as a verbatim ready-made answer, even if it becomes part of the training material at some point down the line, as numerical weights and not actual full text.

In short, an AI will not be able to recall or reproduce a specific question from another user, because that is simply not how AIs and LLMs work. The sessions work in isolation, but the data may later be used for training, as numerical “weights” for a specific item, never as plaintext data.

Why the AI instead of “Google”?

Google and others are mainly about static content, whereas the AI is highly dynamic and can actually understand what you want. It can quickly narrow the answer down to what you need, without all the “fluff” and without you having to wade through endless amounts of text and sites to get what you were looking for. It can do this by incorporating third-party sources or doing searches on your behalf to gather the information. This is exactly what makes the AI so useful: speed, and output limited to what you actually asked for. It is also why the AI is quickly becoming the “Google” replacement.

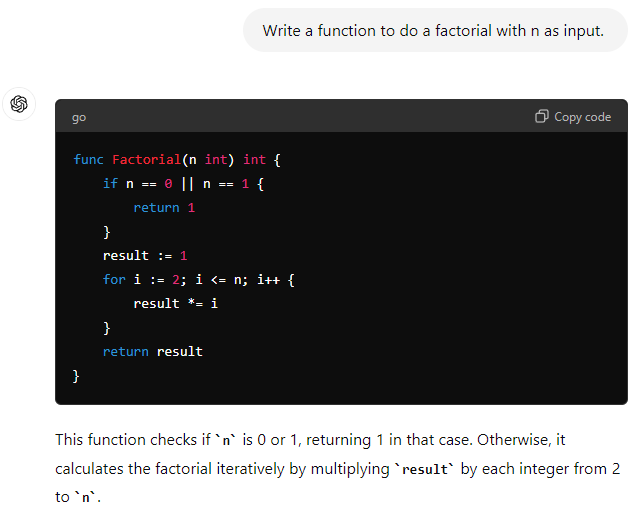

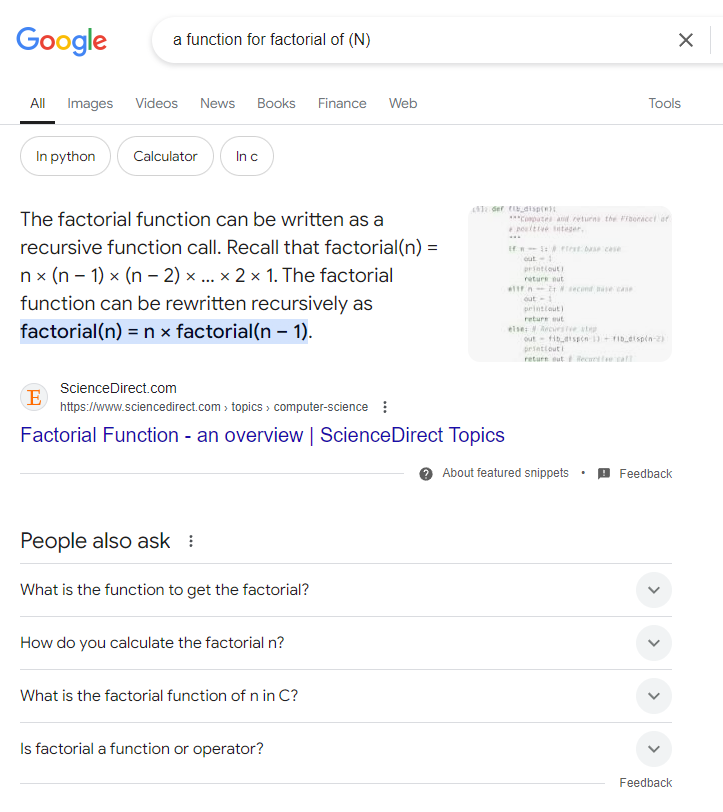

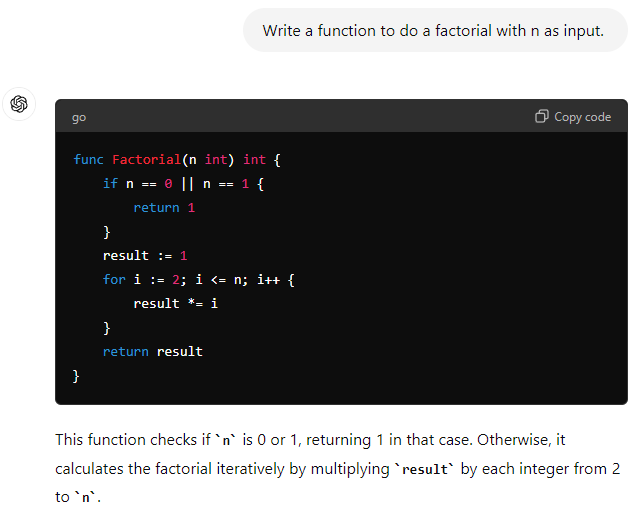

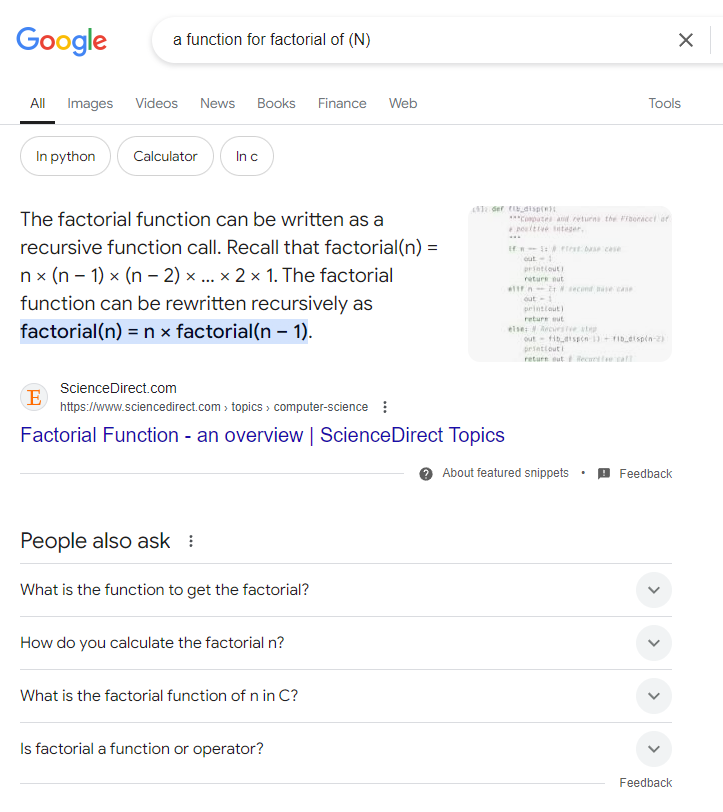

An example:

Compared to Google et al., if you’re stuck on a problem, you can describe the type of problem you have and get a reasoned argument on how to solve it, with explanations specific to the problem, without having to assemble and parse the information yourself, something that can be very tedious.

AI:

(This passes my sanity inspection as a proposal for a solution…)

…. versus Google:

What about Information Security then?

A couple of ground rules when it comes to dealing with AI’s:

- You should be careful with what you share – keep it “anonymous”.

- Don’t share more than you absolutely need to.

- NEVER share credentials or personal data.

- NEVER assume that the answer is correct – only use it as a guideline or example.

Again, always be the second opinion. Never just copy/paste: actually look at what was presented and make your own informed decision on whether it makes sense, and never assume that the answer is 100 percent correct.
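As a rough illustration of the “keep it anonymous” rules above, here is a minimal sketch of scrubbing a question before it is pasted into an AI. The patterns and the `redact` helper are hypothetical and deliberately incomplete. A real setup would need a reviewed and much broader rule set, and this is no substitute for reading what you are about to send.

```python
import re

# Hypothetical example patterns; these will NOT catch everything.
PATTERNS = [
    # E-mail addresses
    (re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"), "<EMAIL>"),
    # IPv4-looking addresses
    (re.compile(r"\b(?:\d{1,3}\.){3}\d{1,3}\b"), "<IP>"),
    # key=value or key: value pairs that look like credentials
    (re.compile(r"(?i)\b(password|passwd|secret|token|api[_-]?key)\b(\s*[:=]\s*)\S+"),
     r"\1\2<REDACTED>"),
]

def redact(text: str) -> str:
    """Replace obvious personal data and credentials with placeholders."""
    for pattern, replacement in PATTERNS:
        text = pattern.sub(replacement, text)
    return text

question = "Login fails for bob@example.com on 10.0.0.5 with password=hunter2"
print(redact(question))
```

The error description survives, which is all the AI needs to help, while the address, the host and the credential do not leave the building.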

This is what any responsible developer would do. And if there was malicious intent, it would be far easier for the developer to do it themselves, right there, rather than go to the AI to get it done, as they would then not need to explain to the AI what the environment looks like and how the specific exploit should be implemented. That is information they already have.

The goal of the infosec team here should be, rather than just banning users from using it, to embrace it and educate the staff about how to use it safely!

Prescribe pragmatic, safer ways to interact with AIs, because in the end they are incredibly useful tools that will not go away, just like the Internet didn’t go away and eventually had to be accepted, despite the security teams’ kicking and screaming.

Trust me, it will be used no matter what anyone says, because it is just too useful not to use. The likelihood of this is even higher in teams under time and resource pressure, where a lot of tedious work can be simplified and done very quickly compared to the alternatives. It is far better to have a mutual understanding of good practices, do’s and don’ts, than a skunkworks division.

Additionally, keep in mind that the vast majority of security tools today use AI, be that code monitoring and validation, antivirus, API scanners or many others. They inspect code, classified document files and so on, regardless of security markings, on pretty much any hardware the company owns or maintains. It’s already there, and if there was a leak you would likely not know about it until way too late (specifically looking at you, MS Copilot). Such an event would be a much bigger threat than the occasional use of AI for a specific purpose by properly trained staff.

All these modern security tools are entirely based on AI or AI input / processing, and all will suffer the same issue of possible data leakage, one way or the other.

Let’s be very clear about something here:

Any tool’s claim that it will not be using the customer data is simply marketing hype and lies, because if the vendors did not use it, they would soon find themselves out of business, unable to keep up with the evolution of code and security threats the way their competitors do. All the talk about “secure models” etc. is marketing fluff. Where do you think their current training material actually comes from?

Hint: They didn’t invent it…

If you deploy any of these AI security tools for wholesale scanning of the company IP, it makes absolutely no sense to unconditionally ban the use of AIs for developers or other creative staff at the same time. As mentioned before, staff training on proper use is the absolute key here. A kneejerk ban because you’re afraid of possible unknowns is absolutely NOT the answer, as all you will achieve is to create an unsafe skunkworks project. Like it or not, it’s reality…

Takehomes for the security team:

- This is something that is here,

- You can’t ignore it,

- You can’t make it go away,

- It’s here to stay.

- Accept the fact.

Deal with it!

The only reasonable thing you can do at this point is to accept “defeat”, just as you eventually had to with the emergence of the internet, and train your staff in reasonable use that protects the company IP and personal data. Make sure that security is covered by providing working guidelines of do’s and don’ts, allowing an agreed, controlled use rather than the chaotic underground skunkworks model that will otherwise emerge regardless of what you say, and over which you will have absolutely no control.

Never mind the fact that you will effectively “outlaw” most, if not all, modern developer IDEs, which are commonly based on AI support, in part or in full, using their own code models, relegating developers back to Notepad or similar “development” tools.

Trying to ban the use of AI will be as effective as the 1920s prohibition was… (NOT!).

Then what?

You should consider specific services (and I am not plugging anyone here) like ChatGPT’s enterprise model, where you can actually get the benefits and control security and privacy, yet prevent any leakage and reuse for training.

If you can save an hour a day per dev, increasing their productivity, this will be an easy expenditure to justify, and you gain control over what is done, how it’s done, who does it, and on what basis they do it. It’s a win-win that will gain acceptance.

If you can’t beat them, be pragmatic and join them, making sure it’s done responsibly…


Imaginary Concept image illustrating a concept of reconfigurable computing using current and future concepts.
